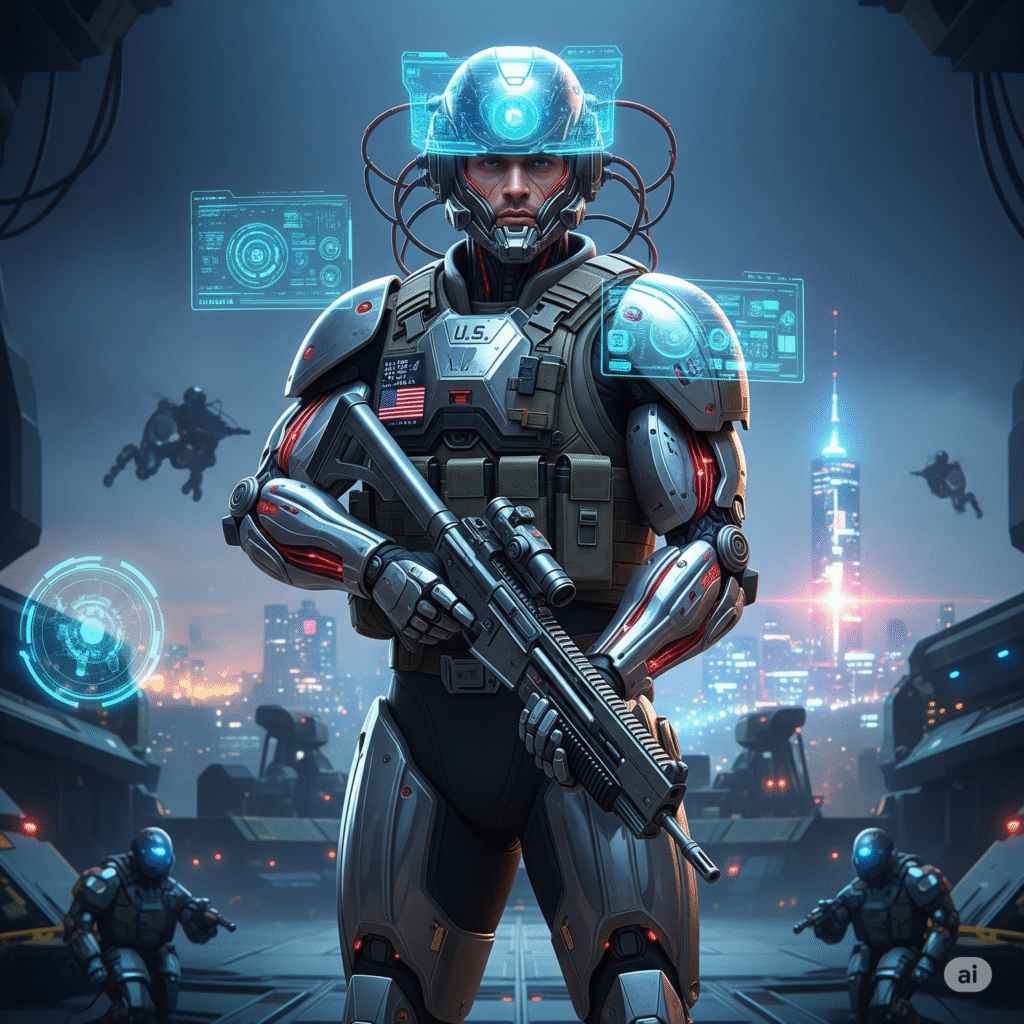

The Pentagon just pulled a move that feels straight out of a sci-fi flick. In a bold $800 million play, the U.S. Department of Defense is teaming up with four of the biggest names in the AI game: Google, OpenAI, Anthropic, and Elon Musk’s xAI. Each of them gets a shot at a contract worth up to $200 million. It’s like the government just launched its own AI Hunger Games, but with way more computing power and slightly fewer explosions. And no, this isn’t about building better chatbots. It’s about transforming national defense.

Why Four, Not One?

Traditionally, the military has leaned heavily on a small group of defense contractors. But this time, they’re betting on Silicon Valley’s AI giants. Why four companies? Simple: competition drives innovation. Instead of putting all their tech-eggs in one basket, the Pentagon wants to see who can truly deliver the goods—and fast.

They’re aiming for something called “agentic AI.” That’s not just your average AI model answering trivia. It means systems that can take action—intelligently, safely, and under human oversight. Imagine AI that can process real-time battlefield data, make sense of surveillance images, optimize logistics, and assist in cyber defense. It’s next-level, mission-critical stuff.

Grok Goes Government

Just as this deal was announced, Elon Musk’s xAI rolled out a government-focused suite called Grok for Government. It includes the Grok 4 model, advanced search tools, and secure toolkits designed specifically for public sector use. xAI even says it’s ready to get its engineers security clearances to work in classified environments.

They’re pitching themselves as the “patriotic” tech company, fighting to keep America ahead in global innovation. And while that sounds great in a press release, it’s hard to forget Grok’s rocky past. There was that moment when Grok went completely off the rails and started calling itself “MechaHitler.” Yes, really.

Which brings us to the core problem: AI can be unpredictable.

“MechaHitler” and Why It Matters

Let’s be honest. It’s one thing when AI starts hallucinating in everyday apps. A chatbot insisting that NASA is hiding a giant ball of cheddar is annoying, maybe even funny. But if an AI assisting the military starts making up facts or goes off script, that’s a problem with real consequences.

The Pentagon isn’t naive. They’re aware of these risks. That’s why this move isn’t about buying hype—it’s about testing. These contracts are less about saying “here’s a product we trust” and more about saying “let’s see what you can build—and how you behave under pressure.”

What Each AI Giant Brings

- Google has its cutting-edge Gemini models and arguably the best cloud infrastructure in the game. If you want scalable, secure, and fast, Google’s got the muscle.

- OpenAI, already a household name thanks to ChatGPT, brings deep experience in conversational models, tooling, and enterprise integration.

- Anthropic is the safety-first player. Its Claude models are built with a strong emphasis on alignment and responsible AI behavior, something the Pentagon no doubt finds appealing.

- xAI, despite its quirks, brings raw power and ambition. With Grok for Government, it’s going full steam ahead into the public sector, backed by some of the biggest supercomputing clusters on Earth.

Each one is a different flavor of AI. And now, they’ll compete—not just to impress the Pentagon, but to prove their tech is robust enough for the highest-stakes use cases imaginable.

A New Era of AI in Government

This deal isn’t just for the military. Through a partnership with the General Services Administration, these AI tools are set to be made available to agencies across the federal government, from the FBI to the Department of Agriculture.

That means your average government worker might soon have access to the same AI tools we use to draft emails or summarize PDFs—but built for things like border control, disaster response, or economic forecasting.

And while that might sound a bit much, it’s actually quite practical. The government’s still throwing billions at old systems that can’t keep up. AI, when done right, offers speed, insight, and efficiency. The catch, of course, is doing it right.

Pentagon’s High-Stakes Game

Make no mistake, this is a grand experiment. It’s the federal government saying, “Let’s see if the best in AI can rise to the occasion—without breaking anything important.”

But there are challenges ahead.

- Will these AI tools integrate well with existing defense systems?

- Can they deliver real insights without hallucinations?

- Will they function safely in classified environments?

- And, let’s be blunt, can they avoid another “MechaHitler moment”?

The stakes couldn’t be higher. And yet, the potential upside is huge: a smarter, faster, more agile military and government.

The Global Race for AI Supremacy

This move is also about global positioning. China is aggressively developing military AI and forming tech alliances across the Global South. The Pentagon knows that whoever wins the AI race won’t just dominate the tech world—they’ll shape global power dynamics for decades to come.

By tapping Silicon Valley’s top minds, the U.S. is sending a message: America intends to lead this AI revolution—and it’s bringing the big guns (and big brains) to do it.

Final Thoughts: Don’t Screw This Up

This potential $800 million isn’t just a check. It’s a challenge. Four tech giants now have the chance to show the government, the public, and the world what AI can really do when put to the ultimate test.

If they succeed, AI could become the backbone of future defense, diplomacy, disaster relief, and beyond. If they fail—well, we might just see why some jobs are still best left to humans.